When the AI says you're fine

AI for pain measurement in clinical settings

This morning, I published a piece in MIT Technology Review on AI pain assessment tools, including how they work, who’s using them, and the clinical results so far. This is a companion piece focused on the questions these tools raise: what happens when we quantify something we don’t fully understand, and what clinical judgment might we lose in the process?

We don’t actually understand pain all that well.

We have a model of the basic process, of course. We know that when you stub your toe, for example, microscopic alarm bells called nociceptors send electrical impulses toward your spinal cord, delivering the first stab of pain, while a slower convoy follows with the duller throb that lingers. At the spinal cord, the signal passes through a kind of neural checkpoint: the “gate.” Depending on what else your nerves are doing and what your brain instructs, that gate can amplify or dampen the signal before it ever reaches your brain.

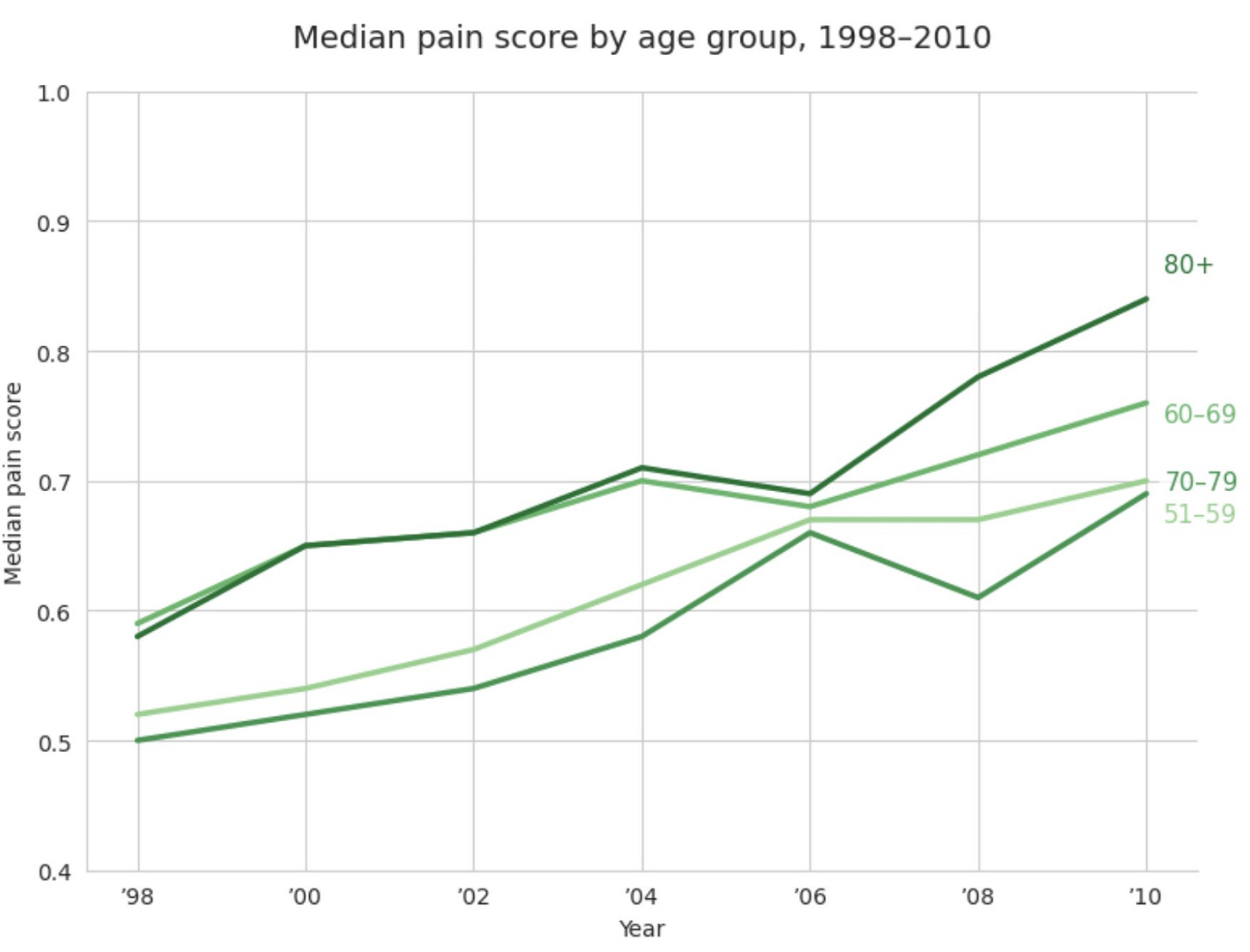

But there’s a lot we don’t know, which is striking for something that one in five U.S. adults report experiencing every day or most days. For example, scientists can’t reliably predict who will slip from a normal injury into years of hypersensitivity. Some research suggests that genetics, past injuries, a person’s environment and stress levels, and even their thoughts and feelings might all contribute to whether they develop chronic pain. Americans have also been experiencing more of it over time, and we’re not entirely sure why.

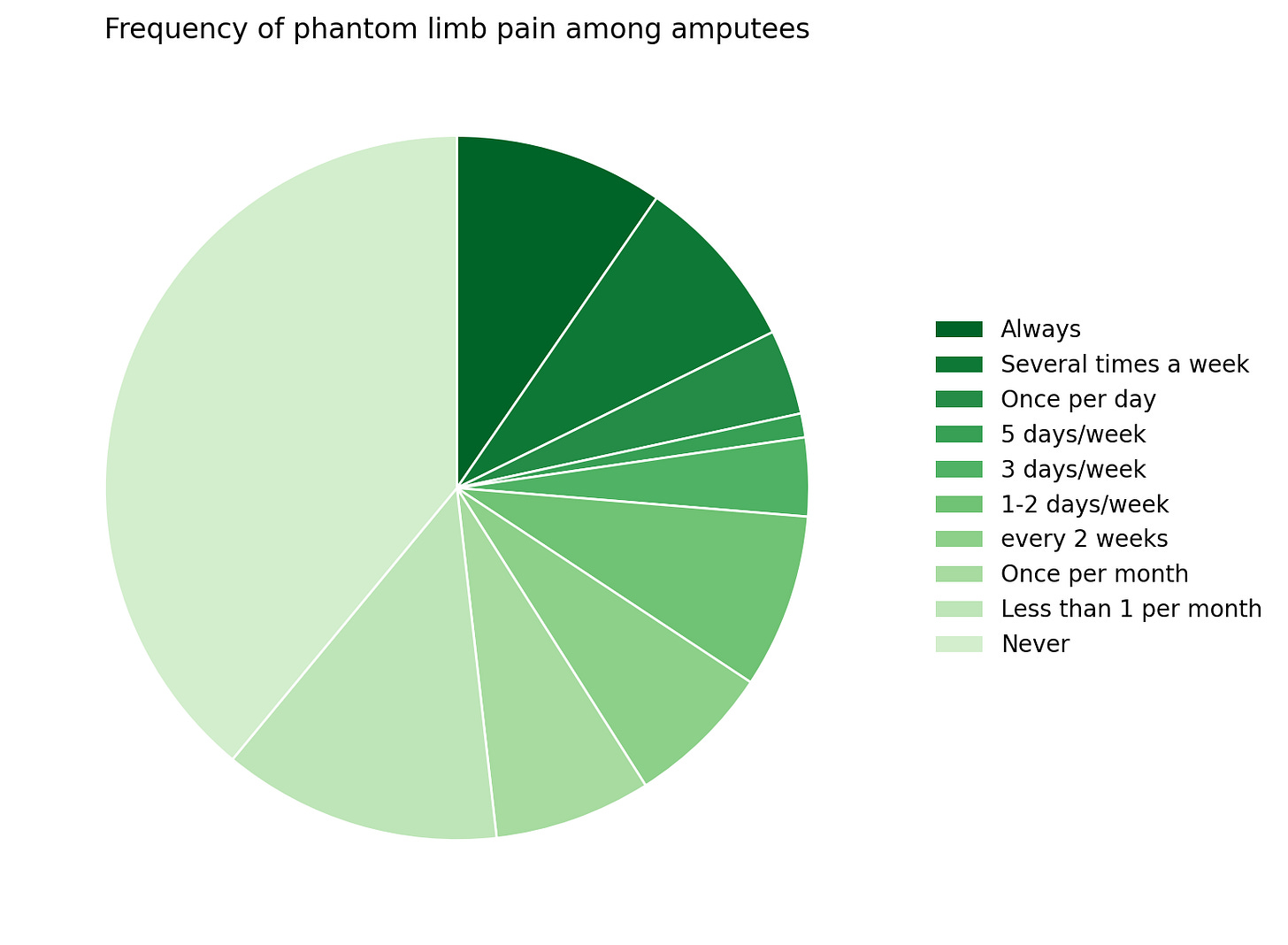

Phantom-limb pain remains similarly opaque. About two-thirds of amputees feel pain in a part of their body that no longer exists, yet none of the competing theories we currently have fully explains why the other third feel nothing.

Some research has linked red hair to lower pain tolerance, and redheads may need up to 20% more anesthesia. Congenital insensitivity to pain, a rare genetic disorder, leaves some people unable to feel physical pain at all. More outlandishly, a Scottish woman named Jo Cameron reportedly feels almost no pain and very little anxiety.

Pain is also stubbornly subjective. The brain doesn’t just receive pain signals; its reaction feeds back into how you physically feel, so expectation and emotion can change how much the same injury hurts. External factors matter too. In one study, experimenters applied the same calibrated stimulus to volunteers from Italy, Sweden, and Saudi Arabia, and the ratings varied dramatically. Italian women recorded the highest pain scores, while Swedish and Saudi participants rated the identical burn several points lower, suggesting that culture can amplify or dampen the felt intensity of the same experience.

How much pain you expect to feel can also directly alter your experience. In one trial, volunteers who believed they had received a pain-relief cream rated a stimulus as 22% less painful than those who knew the cream was inactive, and functional magnetic resonance imaging (fMRI) showed that the drop corresponded with reduced activity in the brain regions that process pain. In other words, they really did feel less pain.

All of this is to say that our understanding of pain is far from complete, and the phenomenon itself is anything but straightforward.

How much does it hurt?

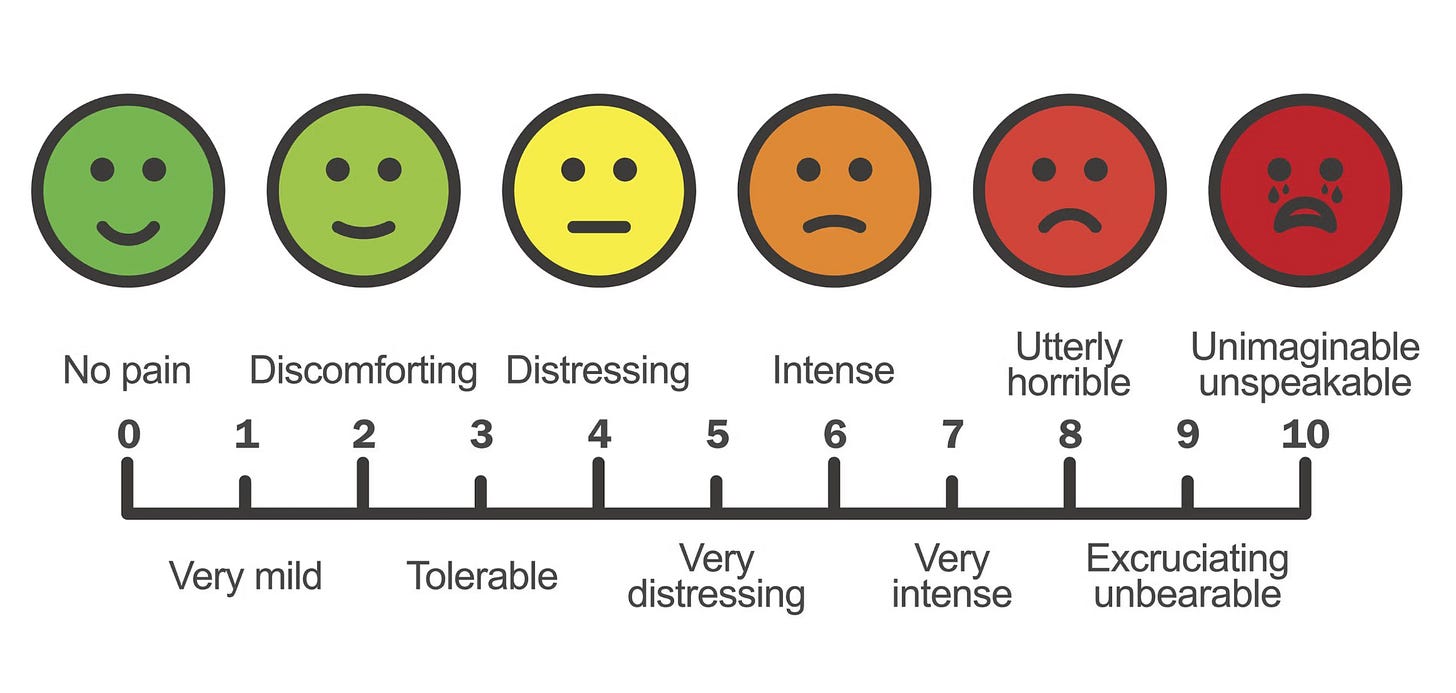

We usually measure pain on a self-reported 0-10 scale, where 0 means no pain and 10 is the worst pain imaginable.

This is the standard of care for a reason. Pain scales are relatively quick and easy to use, they provide us with some basic information about how much pain someone feels they are in at the moment, and they can track relative pain for the same person over time reasonably well.

On the other hand, some people can rate their pain an 8 out of 10 and still go to work, while others can’t get out of bed at a 4. How people interpret the question, their personal pain tolerance, and fluctuations throughout the day all shape the answer in ways that make the number hard to use clinically.

It’s also hard to anchor the scale to concrete examples, because people have different experiences to draw on. We can’t say ‘breaking a bone is a 5,’ for instance, because many people haven’t broken a bone, and those who have might have had wildly different experiences depending on which bone, under what circumstances, and so on.

Some start-ups are trying to change this by making pain a little more legible. They use AI to infer how much pain a person is in, expressed as a numerical score, from readings such as facial expressions, heart rate, or body temperature. Clinicians can then use that output to inform treatment plans.

I wrote about this in an article out today in MIT Technology Review:

PainChek, one of these behavioral models, is already being used in health care settings. It acts like a camera-based thermometer, but for pain: A care worker opens an app and holds a phone 30 centimeters from a person’s face. For three seconds, a neural network looks for nine microscopic movements (upper-lip raise, brow pinch, cheek tension, and so on) that research has linked most strongly to pain. Then the screen flashes a score of 0 to 42. “There’s a catalog of ‘action-unit codes’: about 52 facial expressions common to all humans. Nine of those are associated with pain,” explains co-inventor and senior research scientist Kreshnik Hoti. The system builds directly on the Facial Action Coding System (FACS). After the scan, the app walks the user through a yes-or-no checklist of other signs, like groaning, guarding, and sleep disruption, and stores the result on a cloud dashboard that can show trends.
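To make the scoring step concrete, here is a minimal Python sketch of how a tool of this kind might tally an assessment. It assumes, purely for illustration, that each facial movement and each checklist sign counts 1 point if present and 0 if absent, and that the total is a simple sum. Under that assumption, a 0-to-42 range would just mean 42 yes/no items in total. PainChek’s actual item lists, weights, and code are not described here: `FACIAL_ITEMS`, `CHECKLIST_ITEMS`, and `Assessment` are hypothetical names, and the lists include only the examples named in the excerpt.

```python
from dataclasses import dataclass, field

# Hypothetical item lists: only the examples named in the excerpt are listed.
# The real tool checks nine facial movements plus a longer checklist, not shown here.
FACIAL_ITEMS = ["upper_lip_raise", "brow_pinch", "cheek_tension"]
CHECKLIST_ITEMS = ["groaning", "guarding", "sleep_disruption"]


@dataclass
class Assessment:
    # Presence/absence of each facial movement, as a face-scan step might report it.
    facial: dict = field(default_factory=dict)
    # Yes/no answers the care worker enters after the scan.
    checklist: dict = field(default_factory=dict)

    def score(self) -> int:
        """Assumed scoring rule: 1 point per listed item observed as present."""
        facial_points = sum(bool(self.facial.get(item)) for item in FACIAL_ITEMS)
        checklist_points = sum(bool(self.checklist.get(item)) for item in CHECKLIST_ITEMS)
        return facial_points + checklist_points

    @staticmethod
    def max_score() -> int:
        """Highest possible total: every listed item present."""
        return len(FACIAL_ITEMS) + len(CHECKLIST_ITEMS)


if __name__ == "__main__":
    scan = Assessment(
        facial={"brow_pinch": True, "cheek_tension": True, "upper_lip_raise": False},
        checklist={"guarding": True, "groaning": False},
    )
    print(f"score: {scan.score()} / {Assessment.max_score()}")
```

With two facial movements and one checklist sign present, this prints `score: 3 / 6`. A simple unweighted sum is the easiest rule to audit, but it is only a stand-in; a real instrument may group or weight items differently.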

These companies are initially targeting patients who can’t communicate their pain, such as people with dementia, infants, and surgical patients under anesthesia.

The article goes into more depth on the technologies at play and how they’re being used. But beyond their technical promise, I’m interested in what they might change about how we understand pain itself.

Automating empathy

There’s some reason for unease: what happens when we start delegating the measurement of pain, which we can’t even define precisely, to algorithms?

These systems inherit the limits of the data that train them. Across domains, from self-driving cars to voice recognition, machine-learning models tend to perform worst on the kinds of cases they’ve seen least. That can mean reliably worse performance for groups as varied as people with darker skin tones, children, or stroke survivors. In pain measurement, it might mean underestimating pain in patients whose facial expressions or vital signs differ systematically from those represented in the training data.
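One way to surface this problem is to break a model’s error down by subgroup instead of reporting a single average. The Python sketch below assumes that, for each patient, you have a group label, a reference pain score (for example, a clinician-validated rating), and the model’s score. The function names and toy records are hypothetical and are not drawn from any real study or product. A negative mean signed error for a group means the model scores that group lower than the reference, that is, it underestimates their pain.

```python
from collections import defaultdict
from statistics import mean


def signed_errors(records):
    """Model score minus reference score for each (group, reference, model) record."""
    return [(group, model - reference) for group, reference, model in records]


def error_by_group(records):
    """Mean signed error per subgroup; negative means the model scores pain too low."""
    by_group = defaultdict(list)
    for group, error in signed_errors(records):
        by_group[group].append(error)
    return {group: mean(errors) for group, errors in by_group.items()}


def overall_error(records):
    """Mean signed error across all records, ignoring group membership."""
    return mean(error for _, error in signed_errors(records))


if __name__ == "__main__":
    # Toy records (group, reference score, model score); invented, not real data.
    # Group "A" is well represented; group "B" has only two examples.
    records = [
        ("A", 5, 5), ("A", 7, 7), ("A", 3, 4), ("A", 6, 5), ("A", 4, 4), ("A", 8, 8),
        ("B", 6, 4), ("B", 8, 6),
    ]
    print("overall:", overall_error(records))
    print("by group:", error_by_group(records))
```

With these made-up numbers, the overall mean error is -0.5, which looks modest because group A dominates the average, while group B’s pain is scored two points too low on every record. The aggregate figure alone would hide that gap.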

On the other hand, self-reported pain scores, and clinicians’ reactions to them, are notably biased too. As I mentioned earlier, culture, experience, and expectations can all alter the pain level someone reports. And bias inside the clinic can drive different responses to the same pain score. A 2024 analysis of discharge notes found that women’s pain scores were recorded 10% less often than men’s. At a large pediatric emergency department, Black children presenting with limb fractures were roughly 39% less likely than their white non-Hispanic peers to receive an opioid analgesic, even after controlling for pain score and other clinical factors. Algorithmic outputs may be systematically biased, but so are human judgments.

Relatedly, as I noted in my previous article on radiology, having a model output to refer to can change clinician behavior. Once a pain score appears on a screen, clinicians may defer to it more than they should, even when their own judgment would steer them elsewhere. This “automation bias” has been documented across fields: people tend to trust machine output more than it warrants. One review found that when a system gave incorrect guidance, clinicians were 26 percent more likely to make a wrong decision than unaided peers. A specific numerical output adds the risk of false precision: a 7.5 out of 10 can feel like far more information than the number actually contains.
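To illustrate the false-precision point, the sketch below summarizes several readings of the same patient as a center plus a spread instead of a single figure. The readings are invented for illustration, and `summarize_readings` and `format_score` are hypothetical helpers, not part of any real pain-assessment product. The point is only that a lone reading of 7.5 can sit inside a much wider range.

```python
from statistics import mean, stdev


def summarize_readings(readings):
    """Return (mean, sample standard deviation, min, max) for repeated scores."""
    if len(readings) < 2:
        # stdev() needs at least two data points to estimate spread.
        raise ValueError("need at least two readings to estimate spread")
    return mean(readings), stdev(readings), min(readings), max(readings)


def format_score(readings, scale_max=10):
    """Render a score with its spread instead of as a bare point estimate."""
    center, spread, low, high = summarize_readings(readings)
    return f"{center:.1f}/{scale_max} (±{spread:.1f}; observed {low:g}-{high:g})"


if __name__ == "__main__":
    # Invented readings for one patient over a short window (not real data).
    readings = [7.5, 6.0, 8.5, 5.5, 7.0]
    print(f"first reading alone: {readings[0]}/10")
    print(f"all readings: {format_score(readings)}")
```

Here the first reading alone would say 7.5, while the five readings together print as `6.9/10 (±1.2; observed 5.5-8.5)`. Real tools may express uncertainty differently, or not at all.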

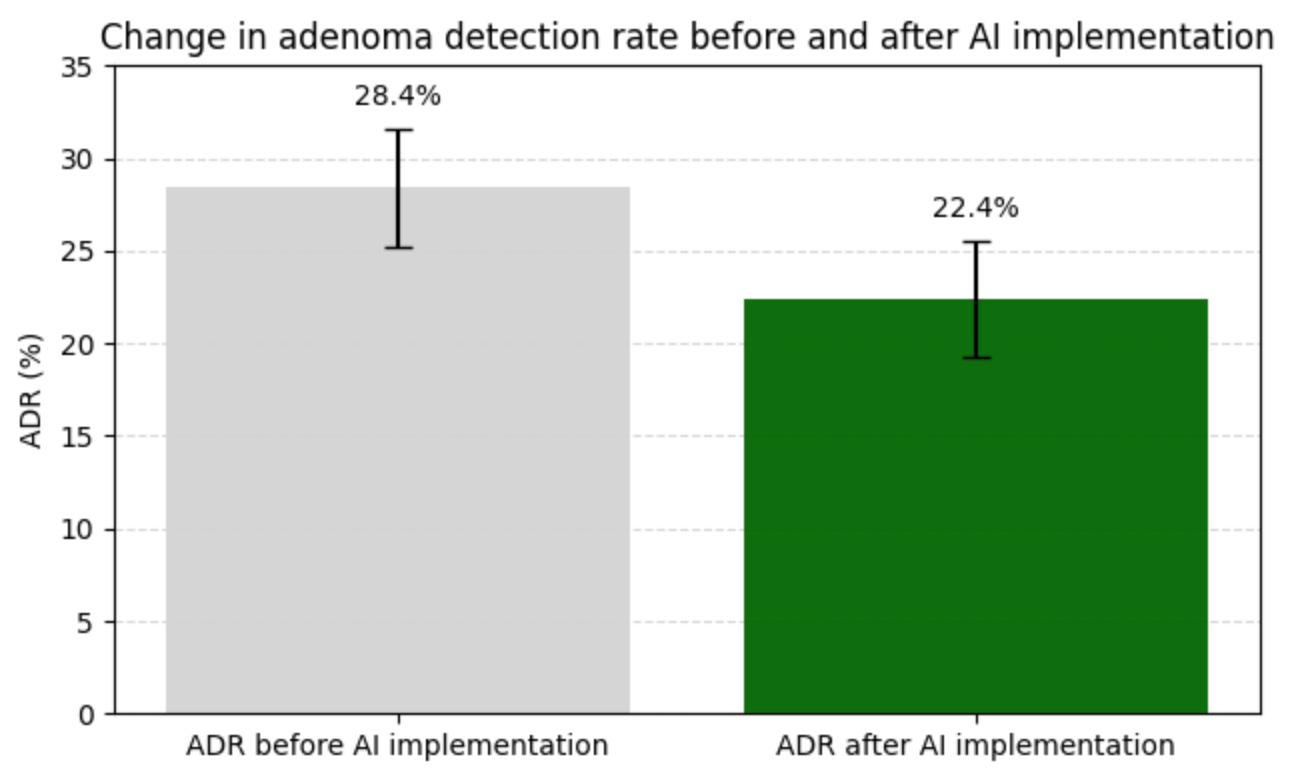

Over time, reliance on automated assessment could also dull clinicians’ own interpretive skills, replacing judgment with trust in the tool. There is some early evidence for this deskilling effect: a recent study in The Lancet Gastroenterology & Hepatology found that doctors who spent three months using an AI tool to help spot precancerous growths during colonoscopies were significantly worse at finding the growths on their own after the tool was removed.

And beneath all of this sits something harder to quantify. Diagnosis has always been, at least in part, a relational act: it requires attention, context, and the willingness to sit with someone else’s discomfort. When we offload that act to a machine, it risks becoming merely procedural. This isn’t a purely humanist concern; it has practical stakes. When pain is recorded automatically, clinicians may ask fewer questions or miss cues a patient offers, and come to see less of each patient as a whole person.

I don’t mean this piece to be pessimistic: these tools have real promise, especially for patients who can’t communicate their pain, and my original article focuses more on the exciting frontier of algorithmic pain measurement. But the more we quantify complex signals like pain, the more tempted we may be to mistake the number for the thing itself. How we choose to use these tools will decide whether AI deepens our understanding of pain, or leads us to simplify it and stop looking too soon.